Social media is the marketplace of ideas on steroids. Billions of individuals have been given multiple mediums of self-expression through its channels. While humans do not always have filters, we expect the interactions that occur through these channels to conform to social and legal conventions. This is the issue of “content moderation”: the elimination of certain words, topics, and images on social media to keep hateful or explicit material from reaching an unsuspecting user. The recent revelations that U.S. military personnel used Facebook to share nude photos of their colleagues drew criticism that Facebook did not do enough to prevent this type of egregious privacy violation.

But content moderation is a tricky business. Companies have a two-tiered system for it. First, they outsource the removal of basic content (such as extreme violence); then they handle moderation of more culturally sensitive areas internally. This system is not without its flaws.

The BBC recently reported that Facebook content moderation is underwhelming when it comes to sexualized images of minors; the BBC found over 100 different pages with explicit material. After the BBC reported the pages to Facebook, only 18 were taken down, and Facebook then reported the BBC to the police instead of offering official comment (note: all the pages have since been taken down). While Facebook has been rightly criticized for this, the episode shows the imperfections of both our expectations and the technology in providing online forums that uphold a universal sense of decency.

Decency cannot be a universal standard. The legal definition of obscenity rests on “knowing it when I see it,” which is reactive in nature and leaves huge grey areas in which one group will see art and another will see pornography. For example, Facebook was criticized for removing the “Napalm Girl” image from the Vietnam War. This disturbing image, which probably offends many, is widely considered an important record of the horrible nature of the conflict and the civilian casualties that resulted from it. If a picture is worth a thousand words, who gets to write those words?

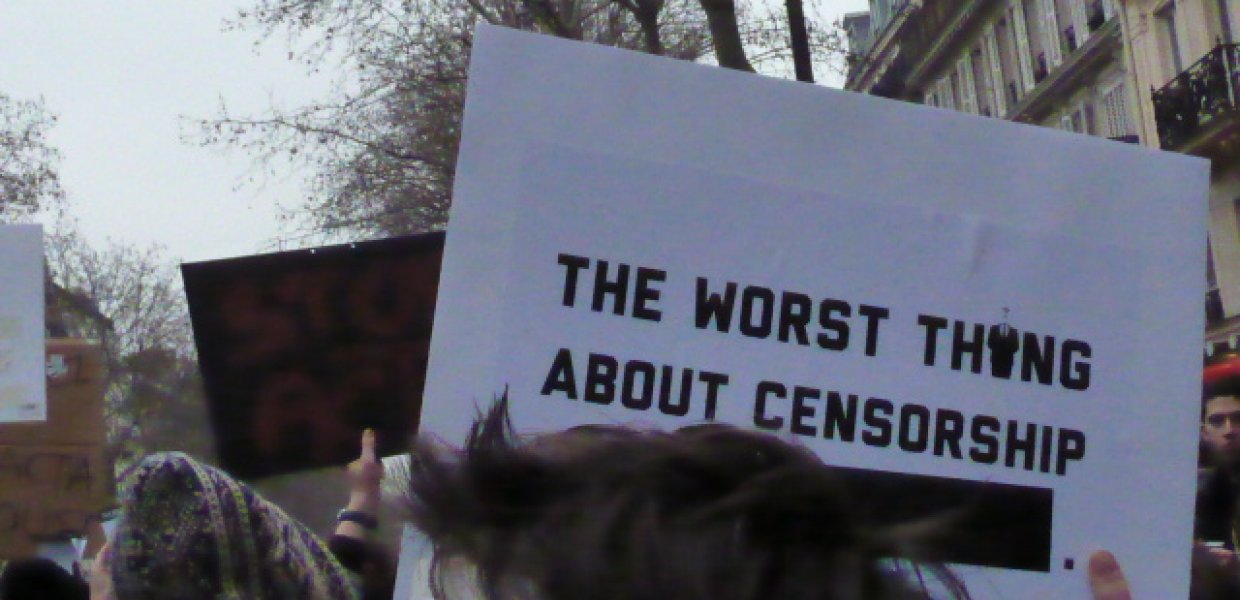

There are numerous examples of Facebook being criticized for removing images that are art pieces but feature suggestive material. There may be a low threshold we can set for indecency, but how can we moderate content that is open to interpretation? Creating a standard for decency means that censorship will occur, and the threat of censorship will hurt the exchange of ideas, because individuals will opt out of sharing anything they believe may be taken down or could get them banned from a specific social media channel. The recent Trump protests in which women went topless led to some Facebook profiles being banned.

It seems that either we need to view Facebook as a regulated form of self-expression, or Facebook needs to create a system that would allow less regulation. The latter seems impossible. Both legally and morally, it is important to shield minors from explicit images and hate speech. And we are still relying on humans for content moderation, which will inevitably lead to mistakes. Therefore, the former needs to be made clear: Facebook is not a medium for self-expression in general, but a particular brand of self-expression. Individuals need to get better at creating alternatives, or be creative about publishing their ideas on independent forums or websites and linking them to Facebook. This could allow Facebook to focus more on the most egregious violations, like the Marine scandal or the sexualization of minors.

Flickr / Photo by Lamessen.